Fingerprint comparisons are often portrayed as straightforward in TV crime shows, but the forensic fingerprint community knows that comparing latent prints can be complex, and that the difficulty varies considerably from one comparison to the next.

A research project led by Jennifer Mnookin, dean of the UCLA School of Law, addressed precisely this question of difficulty in an effort to "establish a scientific methodology to quantify the accuracy and error rates of forensic evidence," particularly latent fingerprint identification. To that end, the researchers set out to develop an accurate, quantitative metric for measuring the difficulty of any given latent print comparison. Is there an objective way, the researchers asked, to determine how difficult it would be to compare a particular set of fingerprints?

Noting that a number of studies have explored the accuracy of fingerprint examiners under experimental conditions, the researchers sought to identify the factors involved in a range of fingerprint comparisons, rate the degree of difficulty of each, and then link that difficulty rating to a predicted error rate.

The researchers noted that some fingerprint comparisons yield highly accurate results whereas others may be prone to error. "Because error rates can be tied to comparison difficulty," they said, "it is misleading to generalize when talking about an overall error rate for the field."

Because fingerprint comparisons are currently performed by human experts, the researchers asked how well examiners can assess the difficulty of the comparisons they make. A related question is whether latent print examiners can tell when a print pair is difficult in an objective sense, that is, whether the community of examiners would broadly consider it more or less difficult.

These questions relate to the broader psychological concept of "metacognition," an individual's awareness of his or her own cognition. "Instead of simply looking at how well examiners perform on a given task," the researchers said, "we are interested in whether they have the meta-awareness, regarding both their own performance and the potential performance of fingerprint examiners more generally on that print."

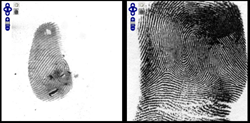

To develop their “hierarchy of fingerprint comparison difficulty,” the researchers created a database by collecting prints from 103 fingers. Each print was first taken using ink, as is standard practice in police stations, to make the print as clear as possible. The person who contributed that print was then asked to use the same finger to touch a number of surfaces in a variety of ways to create a range of latent prints typical of those found at a crime scene. The latent prints were lifted using powder, then scanned with an imaging system.

The researchers settled on 200 latent and known print pairs for the study, half of which were matches and half of which were close non-matches. Working with varying combinations of those pairs, 56 fingerprint examiners made match/non-match judgments and provided confidence and difficulty ratings for each comparison, for a total of 2,282 trials. The overall accuracy across all trials was 91 percent.

"On average," the researchers said, "examiners were generally able to recognize when they were likely to make an error on a comparison, and in aggregate were able to recognize when other examiners were likely to err as well." The researchers said their study "demonstrates that error rates are indeed a function of comparison difficulty." The research also provided "strong evidence" that prints vary in difficulty and that this variation affects the likelihood of error in making comparisons. "Even though it was a logical assumption that print comparisons would have this quality, establishing this point empirically has significant value."

The study also lays a foundation for identifying objective print characteristics that can quantify the difficulty of a comparison, and it illustrates the benefits of creating objective measures of difficulty for print pairs. Finally, the researchers said that their work indicates the "feasibility of an automated system that could grade the difficulty of print comparisons and predict likely error rates."

About This Article

The research described in this article was funded by NIJ cooperative agreement number 2009-DN-BX-K225, awarded to the University of California, Los Angeles (UCLA).

This article is based on the grantee report Error Rates for Latent Fingerprinting as a Function of Visual Complexity and Cognitive Difficulty (pdf, 59 pages) by Jennifer Mnookin, Philip J. Kellman, Itiel Dror, Gennady Erlikhman, Patrick Garrigan, Tandra Ghose, Everett Metler, and Dave Charlton.