Bloodstain patterns can be pivotal evidence, with the power to incriminate or exonerate defendants in high-profile cases. Commonly found at the scene of violent crimes, bloodstains are used by experts to piece together a chronology of events. However, bloodstain pattern analysts have been found to reach contradictory conclusions about the same evidence. In the David Camm case, for example, the bloodstain on the defendant’s clothing was interpreted both as backspatter from a gunshot and as a transfer stain from assisting the victims. [1, 2, 3]

Although admissible in court for more than 150 years,[4] the validity of bloodstain pattern analysis has recently been questioned. In 2009, a National Research Council report called for more objective methods in forensic science and a better understanding of the error rates of current practices.[5] The report specifically cited the analysis of bloodstains, saying that “the uncertainties associated with bloodstain pattern analysis are enormous” and “the opinions of bloodstain pattern analysts are more subjective than scientific.” This was echoed in a 2016 report from the President’s Council of Advisors on Science and Technology (PCAST).[6]

Researcher Austin Hicklin and his colleagues at Noblis, a not-for-profit research institute, set out, with funding from NIJ, to assess the validity of bloodstain pattern analysis by measuring the accuracy of conclusions made by actively practicing analysts. In what is now the largest study of its kind, they applied their extensive experience in executing “black box” studies of fingerprint, handwriting, and forensic footwear examination to bloodstain pattern analysis.

Contradictory Conclusions and Corroboration of Errors

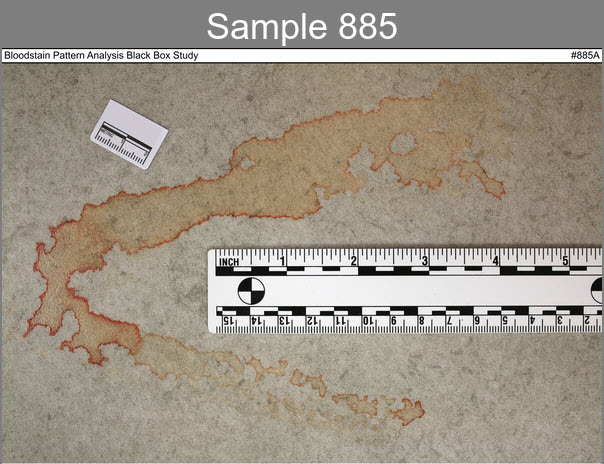

The Noblis researchers recruited 75 practicing bloodstain pattern analysts to examine 150 distinct bloodstain patterns (see Figure 1) over a period of four months. The study included 192 bloodstain images that were collected in controlled laboratory settings as well as operational casework. The researchers planned to measure the overall accuracy, reproducibility, and consensus of the group, and compare performance between participants by their background and training.

Unlike the disciplines examined in Noblis’ previous black box studies, bloodstain pattern analysis did not have well-established conclusion standards used throughout the community, so the researchers had to establish a set of conclusions for the participants to use.[7] They developed three complementary approaches to collect participants’ assessments: brief summary conclusions (dubbed “tweets”), classifications, and open-ended questions. Each participant received requests for a mix of the three types of responses.[8]

The researchers found that 11% of the time the analysts’ conclusions did not match the known cause of the bloodstain. Although the consensus, or average group response, was generally correct (responses with a 95% supermajority were always correct), the rate at which any two analysts’ conclusions contradicted each other was not negligible, at about 8%. Focusing specifically on the incorrect responses, the researchers found that those same errors were corroborated by a second analyst 18% to 34% of the time. The error rates measured in this work are consistent with previous studies.[9,10] Neither participants’ background nor their training was associated with performance.
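To make the three kinds of rates in this paragraph concrete, the following is a minimal sketch of how an error rate, a pairwise contradiction rate, and an error corroboration rate could be computed from a table of responses. It is not the researchers' method: the data are invented, the function names are hypothetical, and it assumes a simplified setup in which each analyst gives one classification label per pattern and any two differing labels count as a contradiction.

```python
from itertools import combinations

# Hypothetical toy data, invented for illustration (not from the study).
# known_cause[pattern] is the ground-truth label for each pattern.
# responses[pattern][analyst] is the label that analyst reported.
known_cause = {"P1": "impact", "P2": "transfer", "P3": "drip"}
responses = {
    "P1": {"A": "impact", "B": "impact", "C": "transfer"},
    "P2": {"A": "transfer", "B": "transfer", "C": "transfer"},
    "P3": {"A": "drip", "B": "impact", "C": "impact"},
}


def error_rate(responses, known_cause):
    """Fraction of all responses that differ from the known cause."""
    total = wrong = 0
    for pattern, by_analyst in responses.items():
        for answer in by_analyst.values():
            total += 1
            wrong += answer != known_cause[pattern]
    return wrong / total


def contradiction_rate(responses):
    """Fraction of analyst pairs on the same pattern whose labels differ.

    Assumption: any difference in label is treated as a contradiction.
    """
    pairs = differing = 0
    for by_analyst in responses.values():
        for a, b in combinations(by_analyst.values(), 2):
            pairs += 1
            differing += a != b
    return differing / pairs


def corroboration_rate(responses, known_cause):
    """Given an erroneous response, how often another analyst on the
    same pattern reported the same erroneous label."""
    pairs = corroborated = 0
    for pattern, by_analyst in responses.items():
        for analyst, answer in by_analyst.items():
            if answer == known_cause[pattern]:
                continue  # only erroneous responses are of interest
            for other, other_answer in by_analyst.items():
                if other == analyst:
                    continue
                pairs += 1
                corroborated += other_answer == answer
    return corroborated / pairs


if __name__ == "__main__":
    print(f"error rate:         {error_rate(responses, known_cause):.2f}")
    print(f"contradiction rate: {contradiction_rate(responses)::.2f}".replace("::", ":"))
    print(f"corroboration rate: {corroboration_rate(responses, known_cause):.2f}")
```

The three quantities answer different questions, which is why the article reports them separately: the error rate compares responses to ground truth, the contradiction rate compares analysts to each other regardless of ground truth, and the corroboration rate asks how often a second opinion would repeat a mistake rather than catch it.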

The fact that participants’ conclusions were sometimes erroneous, or contradicted those of other analysts, suggests potentially serious consequences for casework, especially regarding the possibility of conflicting testimony in court. In the absence of widely accepted criteria for conclusion classifications, one cannot, the researchers argue, expect high rates of reproducibility among analysts.

Importantly, disagreements among analysts were often the result of semantics. This lack of agreement on the meaning and usage of bloodstain pattern terminology and classifications underscores the need for improved consensus standards. Experts on the study team also felt that many conclusions expressed an excessive level of certainty given the sparse data available.

Caution Against Generalizing to Operational Error Rates

The Noblis study differed from operational casework in that the analysts were asked to provide responses based solely on photographs and were not provided additional case-relevant facts that may have influenced their assessments. Moreover, the means of reporting conclusions were different from the manner in which bloodstain pattern analysts typically report their conclusions.

Given these notable differences, Hicklin and his team caution that the results should not be taken as precise measures of operational error rates; the error rates reported describe the proportion of erroneous results for this particular set of images with these particular patterns. It should not be assumed that these rates apply to all bloodstain pattern analysts across all casework. Rather, the work is intended to provide quantitative estimates that may aid in decision making, process and training improvements, and future research.

About This Article

The work described in this article was supported by NIJ award number 2018-DU-BX-0214, awarded to Noblis, Inc.

This article is based on the grantee report “Black Box Evaluation of Bloodstain Pattern Analysis Conclusions” (pdf, 111 pages), by R. Austin Hicklin (Noblis), Kevin R. Winer (Kansas City Police Department Crime Laboratory), Paul E. Kish (Forensic Consultant), Connie L. Parks (Noblis), William Chapman (Noblis), Kensley Dunagan (Noblis), Nicole Richetelli (Noblis), Eric G. Epstein (Noblis), Madeline A. Ausdemore (Noblis), and Thomas A. Busey (Indiana University).

See also the article "Accuracy and reproducibility of conclusions by forensic bloodstain pattern analysts," submitted by the research team and published in Forensic Science International.